In the world of modern web development, FastAPI has emerged as a powerful and efficient framework for building high-performance APIs with Python. One of its standout features is its support for asynchronous programming, which allows developers to handle high-concurrency workloads effectively. However, to maintain robust and scalable applications, proper logging is critical. Asynchronous logging in FastAPI ensures that your application remains performant while still capturing essential diagnostic information. In this guide, we’ll explore the ins and outs of implementing asynchronous logging in FastAPI, including best practices, code examples, and performance considerations.

What is Asynchronous Logging?

Logging is the process of recording events, errors, and other relevant information during the execution of an application. In traditional synchronous logging, each log operation (e.g., writing to a file or sending to a remote service) can block the execution of your application, leading to performance bottlenecks in high-traffic scenarios.

Asynchronous logging, on the other hand, performs log operations without blocking: the application keeps processing other tasks while the log write completes in the background. This matters in FastAPI because async endpoints run on a shared asyncio event loop, so a single blocking call inside an endpoint stalls every other request that loop is serving. FastAPI is built on Starlette, runs on Python’s asyncio, and is typically served by an ASGI server such as Uvicorn, so it is designed to work with asynchronous operations, which makes it a natural fit for asynchronous logging.
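To make the difference concrete, here is a small stdlib-only sketch (Python 3.9+ for `asyncio.to_thread`). `slow_write` is a hypothetical stand-in for a slow log sink that blocks for 0.2 seconds. Four simulated requests call it either directly inside the coroutine or through `asyncio.to_thread`. Called directly, the writes run one after another because each one holds up the event loop; offloaded to worker threads, they overlap.

```python
import asyncio
import time

written: list[str] = []


def slow_write(msg: str) -> None:
    """Hypothetical stand-in for a slow log sink (disk or network) that blocks for 0.2 s."""
    time.sleep(0.2)
    written.append(msg)


async def handle_blocking(i: int) -> None:
    # Calls the sink directly: the event loop is stuck until it returns.
    slow_write(f"blocking request {i}")


async def handle_offloaded(i: int) -> None:
    # Hands the sink call to a worker thread: the loop can run other tasks meanwhile.
    await asyncio.to_thread(slow_write, f"offloaded request {i}")


async def timed(handler) -> float:
    """Run four simulated requests concurrently and return the wall-clock time."""
    start = time.perf_counter()
    await asyncio.gather(*(handler(i) for i in range(4)))
    return time.perf_counter() - start


if __name__ == "__main__":
    blocking = asyncio.run(timed(handle_blocking))  # the four writes run sequentially
    offloaded = asyncio.run(timed(handle_offloaded))  # the four writes overlap in threads
    print(f"blocking: {blocking:.2f}s, offloaded: {offloaded:.2f}s")
```

Exact timings depend on the machine, but the blocking version should take roughly four times as long as the offloaded one.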

Why Use Asynchronous Logging in FastAPI?

FastAPI is optimized for asynchronous I/O operations, which makes it perfect for applications that require high concurrency, such as real-time APIs, microservices, or data streaming platforms. Here are some key reasons to use asynchronous logging in FastAPI:

- Non-blocking Performance: Asynchronous logging prevents I/O-bound operations (e.g., writing to a file or sending logs to a remote server) from blocking the event loop, so other requests keep being handled smoothly.

- Scalability: In high-traffic applications, synchronous logging can introduce latency. Asynchronous logging allows your application to scale efficiently under load.

- Flexibility: Asynchronous logging integrates well with modern logging solutions like cloud-based log aggregators (e.g., ELK Stack, Datadog) or message queues (e.g., Kafka, RabbitMQ).

- Error Tracking: Proper logging is essential for debugging and monitoring, and asynchronous logging ensures you capture critical information without sacrificing performance.

Setting Up Asynchronous Logging in FastAPI

To implement asynchronous logging in FastAPI, we’ll combine Python’s built-in logging module with asynchronous handlers and FastAPI’s middleware capabilities. Below is a step-by-step guide to setting up asynchronous logging.

Step 1: Install Required Dependencies

Ensure you have FastAPI and an ASGI server like Uvicorn installed. You may also want to install libraries for advanced logging, such as aiologger for asynchronous logging or structlog for structured logging.

```shell
pip install fastapi uvicorn aiologger aiofiles
```

Step 2: Configure Asynchronous Logging

We’ll use aiologger, a Python library designed for asynchronous logging, to handle log operations. Alternatively, you can use Python’s logging module with an asynchronous handler.
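If you prefer to stay with the standard library, a minimal sketch of such an asynchronous handler could look like the following. It is an illustration, not aiologger’s design: `AsyncioQueueHandler`, `CollectingHandler`, and `drain` are hypothetical names. The handler only enqueues records onto an `asyncio.Queue`, and a consumer task hands each record to a slower target handler via `asyncio.to_thread`. It assumes logging happens on the event loop’s own thread, because `asyncio.Queue` is not thread-safe.

```python
import asyncio
import logging


class AsyncioQueueHandler(logging.Handler):
    """Hypothetical handler: emit() only enqueues the record, so it never blocks.

    Assumes records are emitted from the event loop's thread, because
    asyncio.Queue is not thread-safe.
    """

    def __init__(self, queue: asyncio.Queue) -> None:
        super().__init__()
        self.queue = queue

    def emit(self, record: logging.LogRecord) -> None:
        self.queue.put_nowait(record)


class CollectingHandler(logging.Handler):
    """Stand-in for a slow sink (file, network): keeps formatted messages in a list."""

    def __init__(self) -> None:
        super().__init__()
        self.messages: list[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.messages.append(self.format(record))


async def drain(queue: asyncio.Queue, target: logging.Handler) -> None:
    """Consumer task: pass each queued record to the target handler in a worker thread."""
    while True:
        record = await queue.get()
        try:
            await asyncio.to_thread(target.handle, record)
        finally:
            queue.task_done()


async def main() -> list[str]:
    queue: asyncio.Queue = asyncio.Queue()
    sink = CollectingHandler()
    queue_handler = AsyncioQueueHandler(queue)

    logger = logging.getLogger("asyncio-queue-demo")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(queue_handler)

    consumer = asyncio.create_task(drain(queue, sink))
    logger.info("request handled")
    logger.warning("slow response")
    await queue.join()  # wait until every queued record has been handled
    consumer.cancel()
    logger.removeHandler(queue_handler)
    return sink.messages


if __name__ == "__main__":
    print(asyncio.run(main()))
```

In a FastAPI app you would start the consumer task at startup (for example in a lifespan handler) and replace `CollectingHandler` with a real `FileHandler` or `StreamHandler`.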

Here’s an example of setting up asynchronous logging with aiologger:

```python
import logging
from contextlib import asynccontextmanager

from aiologger import Logger
from aiologger.handlers.files import AsyncFileHandler
from fastapi import FastAPI, Request

# Configure aiologger: messages are written to app.log asynchronously
logger = Logger(
    name="fastapi-app",
    level=logging.INFO,
)
handler = AsyncFileHandler(filename="app.log")
logger.add_handler(handler)


@asynccontextmanager
async def lifespan(app: FastAPI):
    yield  # app is running
    # Flush pending log records and close the file handler on shutdown
    await logger.shutdown()


# Initialize FastAPI app
app = FastAPI(lifespan=lifespan)


# Middleware to log requests asynchronously
@app.middleware("http")
async def log_requests(request: Request, call_next):
    await logger.info(f"Request {request.method} {request.url}")
    response = await call_next(request)
    await logger.info(f"Response: {response.status_code}")
    return response


@app.get("/")
async def root():
    await logger.info("Root endpoint accessed")
    return {"message": "Hello, FastAPI!"}


# Run the application with Uvicorn
if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8000)
```

Explanation of the Code:

- Logger Setup: We initialize an aiologger.Logger with an AsyncFileHandler to write logs to a file (app.log) asynchronously.

- Middleware for Request Logging: The @app.middleware("http") decorator intercepts every HTTP request and logs the method and URL before processing, as well as the response status code after processing.

- Endpoint Logging: The root endpoint (/) logs a message when accessed, demonstrating how to integrate logging into your routes.

- Non-blocking: All log operations are performed asynchronously using await, so they don’t block the event loop that serves your requests.

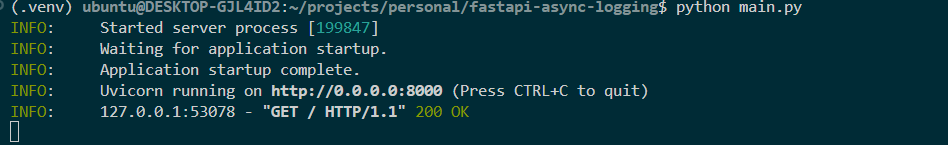

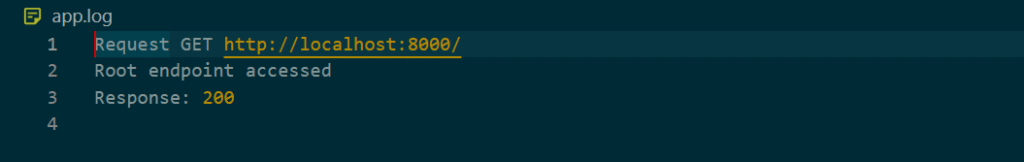

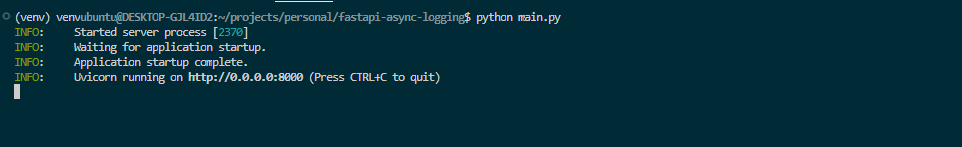

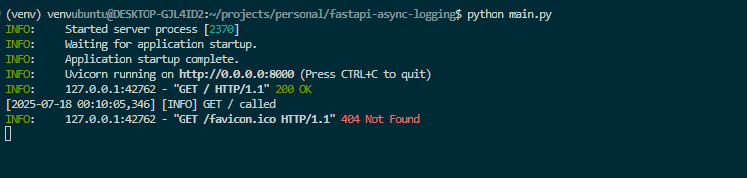

When you run this code and visit http://localhost:8000, you will see this in your terminal:

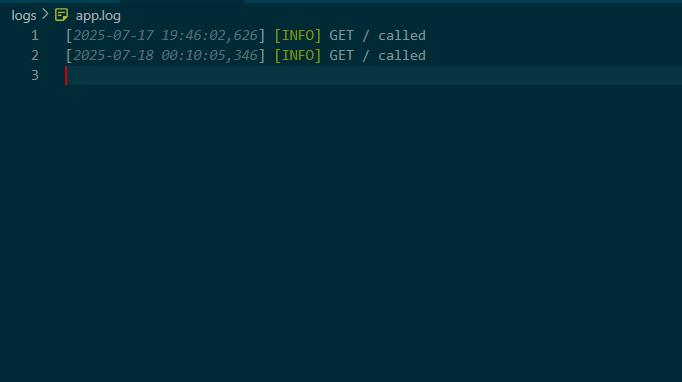

A file named “app.log” will also be created next to your main.py file, with this content:

Structured Logging with structlog

For production-grade applications, you’ll likely need a more sophisticated logging setup. The structlog library provides async logging methods and can render logs in production-friendly formats such as JSON.

Let’s see an example:

Step 1: Install dependencies:

```shell
pip install structlog fastapi uvicorn
```

Step 2: Configure structlog and FastAPI:

```python
import logging
from logging.handlers import RotatingFileHandler

import structlog
from fastapi import FastAPI, Request

# Configure Python's logging module (console output)
logging.basicConfig(
    level=logging.INFO,
    format="%(message)s",  # structlog handles formatting
)

# Configure RotatingFileHandler for log rotation
log_handler = RotatingFileHandler(
    filename="app.log",
    maxBytes=10 * 1024 * 1024,  # 10 MB per file
    backupCount=5,  # keep 5 backup files
)
log_handler.setLevel(logging.INFO)

# Add the handler to the root logger
logging.getLogger().addHandler(log_handler)

# Configure structlog
structlog.configure(
    processors=[
        structlog.processors.TimeStamper(fmt="iso"),  # add ISO timestamp
        structlog.stdlib.add_log_level,  # add log level
        structlog.stdlib.add_logger_name,  # add logger name
        structlog.processors.JSONRenderer(),  # render logs as JSON
    ],
    context_class=dict,
    logger_factory=structlog.stdlib.LoggerFactory(),
    wrapper_class=structlog.stdlib.BoundLogger,
    cache_logger_on_first_use=True,
)

# Initialize FastAPI app
app = FastAPI()

# Get structlog logger
logger = structlog.get_logger("fastapi-app")


# Middleware to log requests
@app.middleware("http")
async def log_requests(request: Request, call_next):
    await logger.ainfo(
        "request",
        method=request.method,
        url=str(request.url),
        # request.client can be None when the server provides no client info
        client_ip=request.client.host if request.client else None,
    )
    response = await call_next(request)
    await logger.ainfo(
        "response",
        status_code=response.status_code,
    )
    return response


@app.get("/")
async def root():
    await logger.ainfo("endpoint_accessed", endpoint="/")
    return {"message": "Hello, FastAPI!"}


if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8000)
```

As you can see, we are using this pattern:

```python
await logger.ainfo("blah blah...", ...)
```

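Because the logger we defined is a structlog logger, it offers both the regular “logger.info” and its async equivalent “logger.ainfo”; the “a” prefix stands for asynchronous. According to the structlog documentation, these a-prefixed methods run the regular log call in a separate thread, so the event loop isn’t blocked while the handlers write.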
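As a rough stdlib-only approximation of that idea (not structlog’s actual code), the sketch below runs `logger.info` in a worker thread with `asyncio.to_thread`. `asyncio.to_thread` also propagates contextvars, so a context-bound value such as a request ID is still visible inside the thread. `request_id`, `RequestIdFilter`, `ainfo`, and `handle_request` are hypothetical names for illustration.

```python
import asyncio
import contextvars
import logging
import threading

# Hypothetical context variable holding the current request's ID.
request_id = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Attach the request_id visible in the current context to each record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id.get()
        return True


class CollectingHandler(logging.Handler):
    """Keeps records in memory so the demo can inspect them."""

    def __init__(self) -> None:
        super().__init__()
        self.records: list[logging.LogRecord] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record)


async def ainfo(logger: logging.Logger, msg: str, *args: object) -> None:
    """Rough stand-in for an "a"-prefixed method: run logger.info in a worker thread.

    asyncio.to_thread copies the current contextvars context into the thread.
    """
    await asyncio.to_thread(logger.info, msg, *args)


async def handle_request(logger: logging.Logger, rid: str) -> None:
    request_id.set(rid)  # gather gives each task its own context copy
    await ainfo(logger, "request %s handled", rid)


async def main() -> list[logging.LogRecord]:
    logger = logging.getLogger("ainfo-demo")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    id_filter, sink = RequestIdFilter(), CollectingHandler()
    logger.addFilter(id_filter)
    logger.addHandler(sink)
    try:
        await asyncio.gather(handle_request(logger, "req-1"), handle_request(logger, "req-2"))
    finally:
        logger.removeFilter(id_filter)
        logger.removeHandler(sink)
    return sink.records


if __name__ == "__main__":
    for record in asyncio.run(main()):
        off_main = record.thread != threading.main_thread().ident
        print(record.request_id, record.getMessage(), "off main thread:", off_main)
```

Each record carries the request ID of the task that logged it, even though the formatting and handler work happened in a worker thread.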

Step 3: Running the app

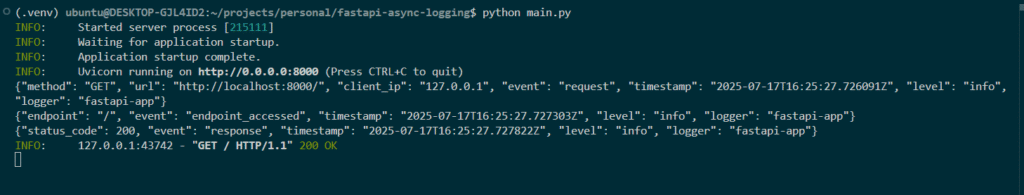

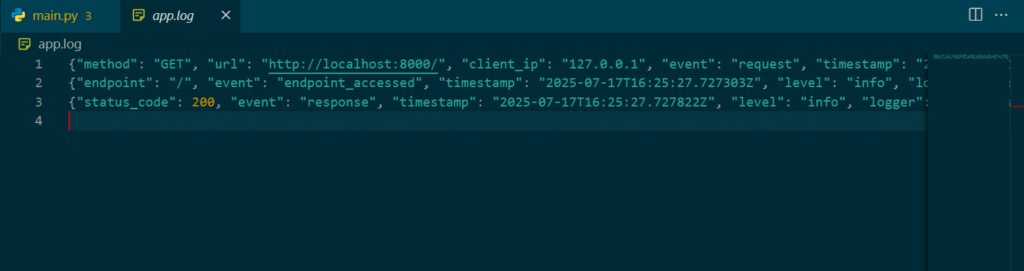

Run the app and open http://localhost:8000, and you will see something like this:

You can see that the logs are JSON formatted.

A file named “app.log” has also been created next to “main.py”, with this content:

The logs written to the file are JSON-formatted too, which makes them easy to process with any log parser or aggregator you may adopt later.
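As an example of what JSON lines make easy, here is a hedged sketch that counts HTTP status codes from the "response" events. The sample lines are hypothetical (shaped like the output of the structlog configuration above, with only a few keys kept), `count_status_codes` is an illustrative helper, and lines that aren’t valid JSON are skipped.

```python
import json
from collections import Counter
from typing import Iterable

# Hypothetical sample lines shaped like the structlog output above
# (an "event" key plus the keyword arguments passed to ainfo).
SAMPLE_LINES = [
    '{"method": "GET", "url": "http://localhost:8000/", "event": "request", "level": "info"}',
    '{"status_code": 200, "event": "response", "level": "info"}',
    '{"status_code": 404, "event": "response", "level": "info"}',
    "INFO:     not a JSON line",
    '{"status_code": 200, "event": "response", "level": "info"}',
]


def count_status_codes(lines: Iterable[str]) -> Counter:
    """Count status codes of "response" events in JSON-lines logs, skipping other lines."""
    counts: Counter = Counter()
    for line in lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # e.g. plain-text lines from other loggers
        if isinstance(entry, dict) and entry.get("event") == "response":
            counts[entry.get("status_code")] += 1
    return counts


if __name__ == "__main__":
    print(count_status_codes(SAMPLE_LINES))
```

Against the real file you could pass the open file object directly, e.g. `with open("app.log", encoding="utf-8") as f: print(count_status_codes(f))`.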

Async Logging using Queue

If you don’t want to depend on a third-party library for async logging, you can use a queue instead.

There are two kinds of queues you can use for logging:

1- An external queue service (such as Redis)

2- A simple in-process Python queue

The first option will be covered in a separate article.

In this article, we’ll use a simple Python queue to demonstrate async logging in FastAPI. Let’s do this.

First, create a file named “async_logger.py” with the following contents:

```python
import logging
import threading
from queue import SimpleQueue

log_queue: SimpleQueue = SimpleQueue()

# Base logger config
logger = logging.getLogger("fastapi-logger")
logger.setLevel(logging.INFO)

formatter = logging.Formatter("[%(asctime)s] [%(levelname)s] %(message)s")

# Console handler
console_handler = logging.StreamHandler()
console_handler.setFormatter(formatter)

# File handler (the logs/ directory must already exist)
file_handler = logging.FileHandler("logs/app.log", mode="a", encoding="utf-8")
file_handler.setFormatter(formatter)

# Add handlers to logger
logger.addHandler(console_handler)
logger.addHandler(file_handler)


def log_worker():
    """Runs in a background thread: write queued records until the None sentinel."""
    while True:
        record = log_queue.get()
        if record is None:
            break  # exit signal
        logger.handle(record)


def start_log_worker() -> threading.Thread:
    thread = threading.Thread(target=log_worker, daemon=True)
    thread.start()
    return thread


def async_log(level: int, msg: str) -> None:
    """Build a log record and enqueue it; the worker thread does the actual I/O."""
    # logger.handle() does not check the logger's level, so filter here
    if not logger.isEnabledFor(level):
        return
    record = logger.makeRecord(
        name=logger.name,
        level=level,
        fn="async_log",
        lno=0,
        msg=msg,
        args=(),
        exc_info=None,
    )
    log_queue.put(record)
```

This file creates a queue for log records and defines two handlers: one for the console and one for a file.

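To make logging asynchronous, a separate background thread (log_worker) takes records off the queue and passes them to the handlers. async_log itself only builds a record and puts it on the queue, which is fast, so request handlers never wait on console or file I/O.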
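The standard library already ships this producer/consumer pattern as `logging.handlers.QueueHandler` and `logging.handlers.QueueListener`: the handler only enqueues records, and the listener owns the background thread that passes them to the real handlers. The sketch below is an alternative to the hand-written worker, not this article’s code; `CollectingHandler` stands in for the console and file handlers, and the logger name is hypothetical.

```python
import logging
import logging.handlers
import queue


class CollectingHandler(logging.Handler):
    """Stand-in for the console/file handlers: keeps formatted lines in a list."""

    def __init__(self) -> None:
        super().__init__()
        self.lines: list[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.lines.append(self.format(record))


def run_demo() -> list[str]:
    log_queue: queue.SimpleQueue = queue.SimpleQueue()
    sink = CollectingHandler()
    sink.setFormatter(logging.Formatter("[%(levelname)s] %(message)s"))

    # Producer side: the handler that request code logs through only enqueues.
    logger = logging.getLogger("fastapi-logger-stdlib")  # hypothetical name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    queue_handler = logging.handlers.QueueHandler(log_queue)
    logger.addHandler(queue_handler)

    # Consumer side: QueueListener runs the background thread that calls the sinks.
    listener = logging.handlers.QueueListener(log_queue, sink, respect_handler_level=True)
    listener.start()  # in FastAPI, do this at lifespan startup
    try:
        logger.info("GET / called")
        logger.debug("dropped: below the logger's INFO level")
        logger.warning("Warning endpoint hit")
    finally:
        listener.stop()  # drains the queue and joins the thread
        logger.removeHandler(queue_handler)
    return sink.lines


if __name__ == "__main__":
    print(run_demo())
```

In a FastAPI app, `listener.start()` would go before the `yield` in the lifespan handler and `listener.stop()` after it; `stop()` waits for the listener thread, so records still on the queue are processed before exit.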

Now, next to “async_logger.py”, create another file named “main.py” that holds your FastAPI app:

```python
import logging
from contextlib import asynccontextmanager

from fastapi import FastAPI

from async_logger import async_log, log_queue, start_log_worker


@asynccontextmanager
async def lifespan(app: FastAPI):
    log_thread = start_log_worker()  # start the background log writer thread
    yield  # app is running
    log_queue.put(None)  # signal the logger thread to exit gracefully
    log_thread.join(timeout=5)  # wait briefly so queued records get written


app = FastAPI(lifespan=lifespan)


@app.get("/")
async def root():
    async_log(logging.INFO, "GET / called")
    return {"message": "Logged to console and file asynchronously"}


@app.get("/warn")
async def warn():
    async_log(logging.WARNING, "Warning endpoint hit")
    return {"message": "Warning logged"}


@app.get("/error")
async def error():
    async_log(logging.ERROR, "Something went wrong!")
    return {"message": "Error logged"}


if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8000)
```

Here we import the “async_logger” module and call the async_log function to enqueue log records. Because async_log is a regular function that only puts a record on the queue, it is called without await.

We use FastAPI’s “lifespan” events to start the worker thread when the app starts and to signal it to exit when the app shuts down.

Make sure to create a folder named “logs” in the same directory as “main.py” and “async_logger.py”.

Let’s run the code by entering this command:

```shell
python main.py
```

We see:

Now visit “http://localhost:8000” in your browser, and you will see a log like this:

To see the log in the “app.log” file, open it and you will see something like this:

That’s it: we have successfully created an async logger using SimpleQueue.

Best Practices for Asynchronous Logging in FastAPI

- Use Structured Logging: Structured logs (e.g., JSON) are easier to parse and analyze, especially in distributed systems.

- Avoid Blocking Operations: Keep slow log I/O (disk, network) off the event loop, either with async logging methods or by handing records to a background thread, to preserve FastAPI’s performance benefits.

- Centralize Logs: Use tools like ELK Stack, Datadog, or CloudWatch to aggregate logs from multiple instances of your FastAPI application.

- Log Levels: Use appropriate log levels (DEBUG, INFO, WARNING, ERROR, CRITICAL) to filter logs based on importance.

- Error Handling: Include detailed error information (e.g., stack traces) in logs to aid debugging.

- Monitor Performance: Profile your logging setup to ensure it doesn’t introduce unexpected latency, especially when logging to external services.

- Rotate Logs: For file-based logging, use log rotation to prevent log files from growing indefinitely.
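For the structured-logging and error-handling practices above, a stdlib-only option is a small JSON formatter. This is a minimal sketch, not a library API: `JsonFormatter` and `example_error_line` are hypothetical names, and the field names are an assumption you can adapt to your aggregator’s schema.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Hypothetical minimal formatter: render each record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            # Include the full stack trace so errors can be debugged from the log alone.
            payload["exc_info"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def example_error_line() -> str:
    """Format a record for a caught exception, similar to what logger.exception() creates."""
    try:
        1 / 0
    except ZeroDivisionError:
        record = logging.LogRecord(
            name="fastapi-app",
            level=logging.ERROR,
            pathname="main.py",
            lineno=0,
            msg="division failed for %s",
            args=("user 42",),
            exc_info=sys.exc_info(),
        )
        return JsonFormatter().format(record)


if __name__ == "__main__":
    print(example_error_line())
```

To use it, attach it to any handler with `handler.setFormatter(JsonFormatter())`; calling `logger.exception(...)` inside an `except` block then records the stack trace in the `exc_info` field.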

Performance Considerations

Asynchronous logging is designed to minimize performance overhead, but there are still factors to consider:

- I/O Bottlenecks: Writing logs to disk or sending them over the network can still introduce delays if not properly managed. Use buffered or batched logging to reduce I/O operations.

- Resource Usage: Asynchronous logging uses additional memory and CPU resources for managing tasks. Monitor resource usage in production to avoid bottlenecks.

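- Queue Overflows: When logging through a queue (in-process or external), make sure it can’t grow without bound under high load. An unbounded queue such as SimpleQueue keeps accepting records even when the consumer falls behind, so memory usage grows; use a bounded queue with a backpressure or drop policy, or increase capacity as needed.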
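Here is a minimal sketch of one such policy, assuming you are willing to lose some records under overload: a bounded `queue.Queue` combined with a `QueueHandler` subclass that uses `put_nowait` and counts dropped records instead of blocking the request. `DroppingQueueHandler` and `run_demo` are hypothetical names; `QueueHandler.enqueue` is the stdlib hook being overridden.

```python
import logging
import logging.handlers
import queue


class DroppingQueueHandler(logging.handlers.QueueHandler):
    """Hypothetical QueueHandler variant for a bounded queue: never block, drop when full."""

    def __init__(self, q: queue.Queue) -> None:
        super().__init__(q)
        self.dropped = 0  # updated under the handler lock that Handler.handle() holds

    def enqueue(self, record: logging.LogRecord) -> None:
        try:
            self.queue.put_nowait(record)
        except queue.Full:
            self.dropped += 1  # shed load instead of stalling the caller


def run_demo() -> tuple[DroppingQueueHandler, queue.Queue]:
    log_queue: queue.Queue = queue.Queue(maxsize=2)  # tiny bound to show the effect
    handler = DroppingQueueHandler(log_queue)
    logger = logging.getLogger("bounded-queue-demo")  # hypothetical name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(handler)
    try:
        # No consumer is running, so only the first two records fit.
        for i in range(5):
            logger.info("event %d", i)
    finally:
        logger.removeHandler(handler)
    return handler, log_queue


if __name__ == "__main__":
    handler, q = run_demo()
    print(f"queued: {q.qsize()}, dropped: {handler.dropped}")
```

Dropping is only one choice; blocking with a timeout or sampling low-severity records are alternatives, and exporting the `dropped` counter as a metric makes the loss visible.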

Conclusion

Asynchronous logging in FastAPI is a powerful technique for building scalable, high-performance APIs. By leveraging libraries like aiologger, structlog, or custom asynchronous handlers, you can ensure that your logging system doesn’t compromise your application’s performance. Whether you’re writing logs to a file, sending them to a remote service, or queuing them for processing, FastAPI’s asynchronous capabilities make it easy to integrate robust logging into your application.

By following the best practices outlined in this guide and using the provided code examples, you can implement a logging system that is both efficient and effective, helping you monitor and debug your FastAPI applications with ease.
