The Leaky Bucket Algorithm is a widely used rate-limiting mechanism in computer science, particularly in networking, distributed systems, and API management. It regulates the flow of data or requests by modeling a bucket that leaks at a constant rate. This article provides an in-depth exploration of the Leaky Bucket Algorithm, its applications, and step-by-step implementations in Python and Golang. Whether you’re a developer, system architect, or curious learner, this guide will equip you with a solid understanding of the algorithm and practical code examples.

Table of Contents

- What is the Leaky Bucket Algorithm?

- How the Leaky Bucket Algorithm Works

- Applications of the Leaky Bucket Algorithm

- Leaky Bucket vs. Token Bucket

- Implementing the Leaky Bucket Algorithm in Python

- Implementing the Leaky Bucket Algorithm in Golang

- Comparing Python and Golang Implementations

- Challenges and Considerations

- Conclusion

What is the Leaky Bucket Algorithm?

The Leaky Bucket Algorithm is a rate-limiting technique that controls the rate at which requests or data packets are processed. Imagine a bucket with a hole at the bottom. Water (representing requests or data) pours into the bucket at varying rates, but it leaks out at a constant rate. If the bucket overflows due to excessive inflow, the excess water (requests) is discarded or queued, depending on the implementation.

This algorithm ensures that the system processes requests at a steady, predictable rate, preventing overload and maintaining stability. It’s particularly useful in scenarios where bursty traffic needs to be smoothed out, such as in network traffic shaping, API rate limiting, or task scheduling.

Key Characteristics

- Constant Leak Rate: The bucket leaks (processes) requests at a fixed rate.

- Fixed Capacity: The bucket has a limited capacity, representing the maximum number of requests it can hold.

- Overflow Handling: Excess requests are either dropped or queued.

- Time-Based: The algorithm relies on time to determine when to leak (process) requests.

How the Leaky Bucket Algorithm Works

The Leaky Bucket Algorithm operates as follows:

- Bucket Initialization:

- The bucket has a fixed capacity (e.g., 10 requests).

- A constant leak rate defines how many requests are processed per unit of time (e.g., 2 requests per second).

- Request Arrival:

- When a new request arrives, it is added to the bucket if there’s space.

- If the bucket is full, the request is either discarded (in a dropping implementation) or queued (in a queuing implementation).

- Leaking Process:

- At regular intervals (based on the leak rate), requests are removed from the bucket and processed.

- The leak rate ensures that requests are processed at a steady pace, regardless of the arrival rate.

- Time Tracking:

- The algorithm tracks the time since the last leak to calculate how many requests can be processed in the current time window.

Example

Suppose a bucket has a capacity of 10 requests and a leak rate of 2 requests per second. If 15 requests arrive in 1 second:

- 10 requests fill the bucket.

- 5 requests are discarded (or queued).

- After 1 second, 2 requests are processed (leaked), leaving 8 in the bucket.

- After another second, 2 more are processed, and so on.

Applications of the Leaky Bucket Algorithm

The Leaky Bucket Algorithm is versatile and finds applications in various domains:

- Networking: Traffic shaping in routers to prevent network congestion by smoothing bursty traffic.

- API Rate Limiting: Limiting the number of API calls a user can make in a given time period (e.g., 100 requests per minute).

- Task Scheduling: Regulating the execution of tasks in job queues to avoid overwhelming a system.

- Distributed Systems: Managing request rates in microservices to ensure fair resource allocation.

- Database Systems: Controlling the rate of queries to prevent overloading database servers.

Leaky Bucket vs. Token Bucket

The Leaky Bucket Algorithm is often compared to the Token Bucket Algorithm, another popular rate-limiting mechanism. Here’s a quick comparison:

| Feature | Leaky Bucket | Token Bucket |

|---|---|---|

| Flow Control | Smooths out bursty traffic by processing at a constant rate. | Allows bursts up to the number of available tokens. |

| Bucket Content | Holds requests/data. | Holds tokens that represent permission to send requests. |

| Overflow Behavior | Discards or queues excess requests. | Denies requests if no tokens are available. |

| Use Case | Traffic shaping, steady processing. | Burst control, flexible rate limiting. |

The Leaky Bucket is ideal for scenarios requiring consistent output rates, while the Token Bucket is better for allowing controlled bursts.

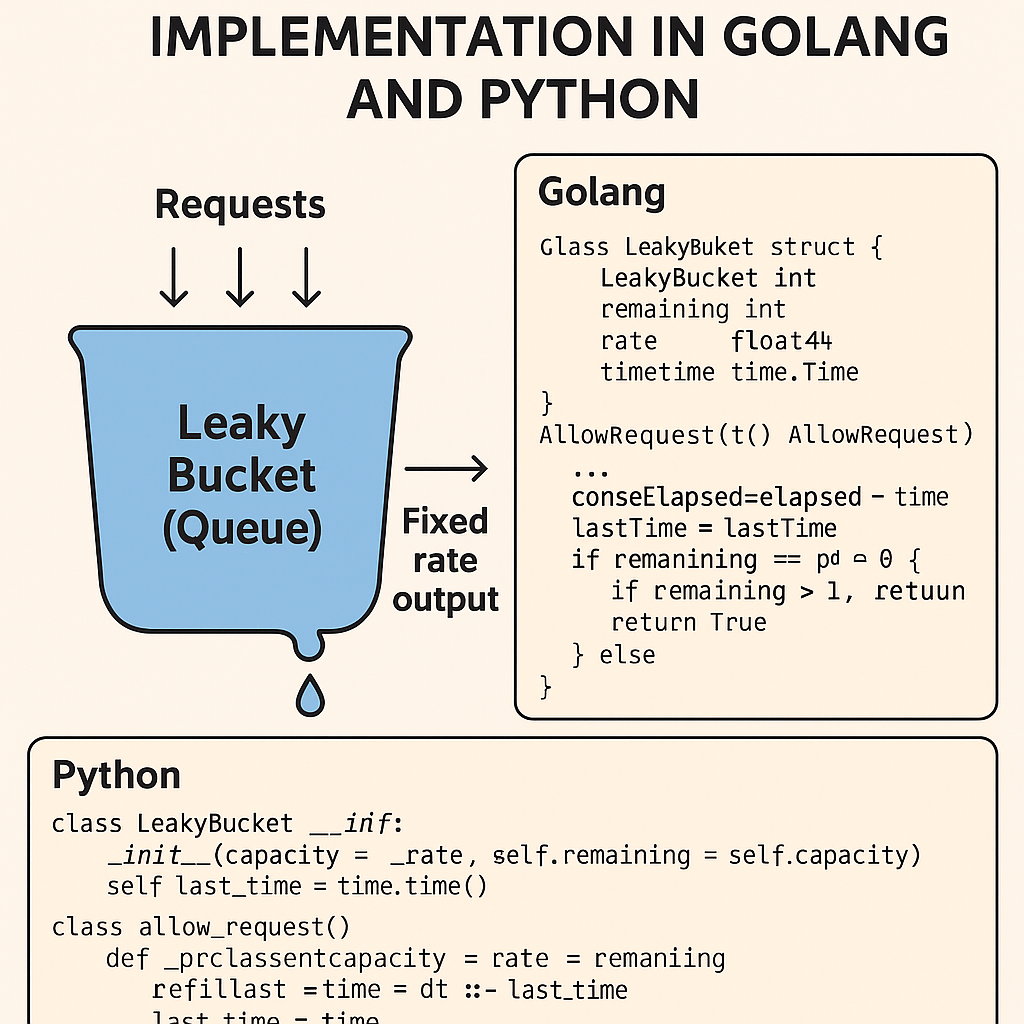

Implementing the Leaky Bucket Algorithm in Python

Below is a Python implementation of the Leaky Bucket Algorithm. This implementation uses a dropping approach, where excess requests are discarded if the bucket is full. It includes time-based leaking and thread-safe handling for concurrent environments.

import time

import threading

from typing import Optional

class LeakyBucket:

def __init__(self, capacity: int, leak_rate: float):

"""

Args:

capacity (int): Maximum number of requests the bucket can hold.

leak_rate (float): Number of requests processes per second

"""

self.capacity = capacity

self.leak_rate = leak_rate

self.bucket = 0 # Current number of requests in the bucket

self.last_leak = time.time()

self.lock = threading.Lock()

def _leak(self) -> None:

"""

Simulate the bucket leaking based on elpased time.

Updates the bucket size by removing requests proportional to time passed.

"""

now = time.time()

elpased = now - self.last_leak

leaked = elpased * self.leak_rate

self.bucket = max(0, self.bucket - leaked)

self.last_leak = now

def add_request(self) -> bool:

"""

Attempt to add a request to the bucket.

Returns:

bool: True if request is added, False if discarded (Bucket Full).

"""

with self.lock:

self._leak() # Update bucket state

if self.bucket < self.capacity:

self.bucket += 1

return True

return False

def get_bucket_size(self) -> float:

"""

Get the current number of requests in the bucket.

Returns:

float: Current bucket size.

"""

with self.lock:

self._leak()

return self.bucket

bucket = LeakyBucket(capacity=20, leak_rate=2)

for i in range(100):

if bucket.add_request():

print(f"Request {i+1} accepted. Bucket size: {bucket.get_bucket_size():.2f}")

else:

print(f"Request {i+1} discarded. Bucket full.")

time.sleep(0.1)Explanation

- Class Structure: The LeakyBucket class encapsulates the bucket’s state and behavior.

- Thread Safety: A threading.Lock ensures safe concurrent access.

- Leaking Mechanism: The _leak method calculates how many requests to remove based on elapsed time and the leak rate.

- Request Handling: The add_request method checks if the bucket has space after leaking and either accepts or discards the request.

- Running: Simulates 100 requests arriving every 0.1 seconds, with a bucket capacity of 20 and a leak rate of 2 requests per second.

Implementing the Leaky Bucket Algorithm in Golang

Below is a Golang implementation of the Leaky Bucket Algorithm. It uses Go’s concurrency features (goroutines and channels) for efficient request handling and is also thread-safe.

package main

import (

"fmt"

"sync"

"time"

)

// LeakyBucket represents the leaky bucket rate limiter

type LeakyBucket struct {

capacity float64 // Maximum number of requests the bucket can hold

leakRate float64 // Requests leaked per second

bucket float64 // Current number of requests in the bucket

lastLeak time.Time // Timestamp of the last leak

mutex sync.Mutex // For thread safety

}

// NewLeakyBucket creates a new LeakyBucket instance

func NewLeakyBucket(capacity, leakRate float64) *LeakyBucket {

return &LeakyBucket{

capacity: capacity,

leakRate: leakRate,

bucket: 0,

lastLeak: time.Now(),

}

}

// leak updates the bucket by removing requests based on elapsed time

func (lb *LeakyBucket) leak() {

now := time.Now()

elapsed := now.Sub(lb.lastLeak).Seconds()

leaked := elapsed * lb.leakRate

lb.bucket = max(0, lb.bucket-leaked)

lb.lastLeak = now

}

// max returns the maximum of two float64 values

func max(a, b float64) float64 {

if a > b {

return a

}

return b

}

// AddRequest attempts to add a request to the bucket

func (lb *LeakyBucket) AddRequest() bool {

lb.mutex.Lock()

defer lb.mutex.Unlock()

lb.leak() // Update bucket state

if lb.bucket < lb.capacity {

lb.bucket++

return true

}

return false

}

// GetBucketSize returns the current number of requests in the bucket

func (lb *LeakyBucket) GetBucketSize() float64 {

lb.mutex.Lock()

defer lb.mutex.Unlock()

lb.leak()

return lb.bucket

}

func main() {

// Bucket with capacity of 10, leaking 2 requests per second

bucket := NewLeakyBucket(20, 2)

// Simulate requests

for i := 0; i < 100; i++ {

if bucket.AddRequest() {

fmt.Printf("Request %d accepted. Bucket size: %.2f\n", i+1, bucket.GetBucketSize())

} else {

fmt.Printf("Request %d discarded. Bucket full.\n", i+1)

}

time.Sleep(100 * time.Millisecond) // Simulate requests every 0.2 seconds

}

}

Explanation

- Struct Definition: The LeakyBucket struct holds the bucket’s state, including capacity, leak rate, and current size.

- Concurrency: A sync.Mutex ensures thread-safe operations.

- Leaking Logic: The leak method calculates the number of requests to remove based on elapsed time.

- Request Handling: The AddRequest method checks bucket capacity after leaking and either accepts or discards the request.

- Main Function: Simulates 100 requests arriving every 0.1 seconds, similar to the Python example.

Comparing Python and Golang Implementations

| Aspect | Python | Golang |

|---|---|---|

| Concurrency | Uses threading.Lock for thread safety. | Uses sync.Mutex for thread safety. |

| Syntax | More concise, dynamic typing. | Static typing, more verbose but explicit. |

| Performance | Slower due to Python’s interpreter overhead. | Faster due to compiled nature of Go. |

| Use Case | Prototyping, scripting, or smaller systems. | High-performance, concurrent systems. |

| Ecosystem | Rich libraries, easier for quick development. | Built-in concurrency, ideal for microservices. |

Challenges and Considerations

- Time Precision: The accuracy of the leak rate depends on the system’s clock precision. Small time drifts can affect the algorithm’s behavior.

- Concurrency: In high-concurrency scenarios, lock contention can become a bottleneck. Consider using lock-free data structures or sharding for scalability.

- Overflow Handling: Deciding whether to drop or queue excess requests depends on the use case. Dropping is simpler but may lead to data loss, while queuing requires additional memory.

- Scalability: For distributed systems, a centralized bucket may introduce latency. Distributed implementations (e.g., using Redis) are often necessary.

- Configuration: Tuning the bucket capacity and leak rate requires careful analysis of the system’s workload and requirements.

Conclusion

The Leaky Bucket Algorithm is a powerful and intuitive method for rate limiting, ensuring systems process requests at a steady rate. Its applications span networking, API management, and task scheduling, making it a staple in system design. The Python and Golang implementations provided above demonstrate how to build a thread-safe, time-based leaky bucket with a dropping approach.

By understanding the algorithm’s mechanics and implementing it in your preferred language, you can effectively manage traffic and prevent system overload. Whether you choose Python for its simplicity or Golang for its performance, the Leaky Bucket Algorithm is a versatile tool for any developer’s toolkit.

For further exploration, consider extending the implementations to support queuing, distributed systems, or integration with frameworks like Flask (Python) or Gin (Golang) for real-world API rate limiting.

Leave a Reply