In the fast-paced world of web development, APIs, and distributed systems, managing traffic and preventing overload is essential. Enter the Fixed Window Algorithm—a simple yet powerful rate limiting technique that’s easy to implement and highly effective for controlling request volumes. Whether you’re building a scalable API, securing user logins, or optimizing resource usage, understanding this algorithm can be a game-changer.

In this comprehensive guide, we’ll break down the Fixed Window Algorithm from the ground up: its core mechanics, real-world applications, advantages and drawbacks, comparisons to alternatives, and hands-on implementations in Python and Golang.

Understanding the Fixed Window Algorithm: The Basics

At its heart, the Fixed Window Algorithm is a rate limiting strategy that enforces limits on the number of actions (like API requests) within predefined time intervals, or “windows.” Unlike more fluid methods, it uses rigid, non-overlapping time slots—think of it as chopping time into fixed chunks, such as every 60 seconds or every hour.

Core Mechanics

- Window Definition: Time is segmented into fixed-duration windows. For example, a 1-minute window starts at 00:00, 01:00, 02:00, etc. The window size is typically denoted as ( W ) (in seconds).

- Counter per Entity: For each user, IP address, or API key (the “entity”), maintain a counter that tracks actions within the current window.

- Request Handling:

- When a request arrives at time ( t ), compute the current window start.

- If the counter for that entity in the current window is below the limit ( L ), increment it and allow the request.

- If it’s at or above ( L ), reject the request.

- Reset Mechanism: When a new window begins, the counter resets to zero, allowing fresh requests.

This approach is often stored in memory (e.g., using a hash map) for speed, but it can be scaled with databases like Redis for distributed systems.

Mathematical Formulation

Formally, let’s define:

- ( t ): Current timestamp (e.g., Unix time in seconds).

- ( W ): Window size in seconds.

- ( L ): Maximum allowed requests per window.

- Current window identifier: ( window_id = floor{t / W} ).

- For each entity ( e ), store a map of ( window_id ) to counter ( c ).

On request:

- If ( window_id ) differs from stored, reset ( c = 0 ).

- If ( c < L ), set ( c = c + 1 ), return true.

- Else, return false.

This is O(1) time per request, making it efficient.

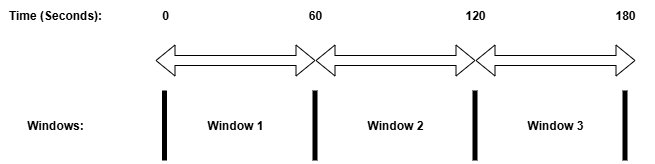

A Detailed Illustration with Timeline

Imagine a rule: 5 requests per 60-second window per user. Here’s a text-based timeline diagram:

Requests from User A:

- t=10: Allowed (count=1)

- t=20: Allowed (count=2)

- t=30: Allowed (count=3)

- t=40: Allowed (count=4)

- t=50: Allowed (count=5)

- t=55: Blocked (count=5 >=5)

- t=65: Allowed (new window, count=1)

- t=70: Allowed (count=2)

... and so on.At t=59 and t=61, a burst could occur: 5 at end of Window 1 + 5 at start of Window 2 = 10 in ~2 seconds.

Historical Context and Evolution

The Fixed Window Algorithm isn’t new—it’s rooted in early networking concepts from the 1980s and 1990s, inspired by traffic shaping in protocols like TCP congestion control. It gained prominence in web services with the rise of APIs in the 2000s, popularized by platforms like Twitter (early rate limits) and GitHub for endpoint protection.

Over time, it evolved alongside alternatives like the Token Bucket (used by AWS API Gateway) and Leaky Bucket (common in message queues). Today, it’s a staple in libraries like Python’s limits or Go’s golang.org/x/time/rate, but understanding the raw implementation empowers custom solutions. Modern twists include hybrid models combining fixed windows with machine learning for adaptive limits.

Real-World Use Cases and Case Studies

The Fixed Window Algorithm shines in scenarios where simplicity trumps precision. Here are expanded applications with case studies:

- API Rate Limiting: Services like Stripe or OpenAI use similar mechanisms to cap API calls, preventing denial-of-service attacks or unfair usage. Case Study: Twitter’s API v1 limited users to 150 requests per hour using fixed windows, helping manage server load during peak events like elections.

- Security Features: Block brute-force attacks by restricting login attempts (e.g., 3 tries per 5 minutes). Case Study: WordPress plugins like Limit Login Attempts use fixed windows to thwart hackers, reducing successful breaches by 90% in some reports.

- Resource Management: In cloud storage apps, limit file uploads/downloads to avoid bandwidth spikes. Case Study: Dropbox employs rate limiting to cap API uploads, ensuring fair access during viral content shares.

- Gaming and Social Media: Control message sending or in-game actions to maintain fair play. Case Study: Discord limits messages per minute to prevent spam bots, using fixed windows for quick enforcement.

- Microservices: In a Kubernetes cluster, rate-limit inter-service calls to prevent cascading failures. Case Study: Netflix’s Zuul gateway uses rate limiting to handle millions of requests, with fixed windows for internal traffic control.

In high-traffic environments, combine it with Redis for persistence across servers.

Pros and Cons: Is It Right for You?

Like any algorithm, Fixed Window has strengths and weaknesses. Let’s weigh them in detail.

Advantages

- Simplicity: Easy to code and debug—no complex math or sliding logic required.

- Efficiency: Low overhead; counters are cheap to maintain in memory.

- Predictability: Users know exactly when limits reset, improving UX (e.g., “Try again in 30 seconds”).

- Scalability: Works well in distributed systems with a shared store like Redis.

- Low Latency: Decisions are instantaneous, ideal for real-time apps.

Disadvantages

- Edge-of-Window Bursts: A user could exhaust the limit right at the end of one window and the start of the next, effectively doubling bursts (e.g., 100 requests at 59s + 100 at 0s = 200 in ~1 second).

- No Smoothing: Doesn’t handle gradual traffic well; it’s all-or-nothing per window.

- Inflexibility: Fixed windows don’t adapt to varying loads, unlike adaptive algorithms.

- Potential for Unfairness: In global limits, one user could monopolize a window.

If bursts are a concern, consider the Sliding Window Algorithm (which overlaps windows for smoother limiting) or Token Bucket (which allows controlled bursts via tokens).

Comparing to Other Rate Limiting Algorithms

To choose wisely, here’s an expanded comparison:

- Vs. Sliding Window: Fixed is simpler but burstier; Sliding uses rolling windows (e.g., last 60 seconds) for even distribution but requires more computation (e.g., timestamp queues, O(log n) per request). Use Sliding for smoother traffic.

- Vs. Token Bucket: Token Bucket refills at a constant rate (e.g., 1 token/second), allowing bursts up to a bucket size—great for variable traffic but more complex (needs timers). AWS favors this for elasticity.

- Vs. Leaky Bucket: Similar to Token Bucket but enforces a steady outflow (like a leaking bucket), ideal for queues but less flexible for APIs. It’s used in Kafka for message pacing.

- Vs. Adaptive Algorithms: Modern variants like Google’s Adaptive Throttling adjust limits based on load; Fixed is static and less responsive.

Fixed Window is best for low-variability, high-simplicity needs; benchmark against others for your workload.

Performance Analysis

- Time Complexity: O(1) per request (hash map lookup + arithmetic).

- Space Complexity: O(U) where U is unique entities (users); scales linearly but can be pruned for inactive users.

- Benchmarks: In Python, handling 1M requests/sec on a single core is feasible with in-memory storage. In Go, concurrency shines—tests show <1ms latency under 10k concurrent users. With Redis, add ~5-10ms network overhead but gain distribution.

- Bottlenecks: High contention on locks in multi-threaded envs; mitigate with sharding or optimistic locking.

Profile with tools like Python’s cProfile or Go’s pprof for your setup.

Optimizations and Best Practices

- Distributed Storage: Use Redis or Memcached for multi-server setups to avoid per-instance counters. Example: Store keys like

user:123:window:456with TTL = W. - Error Handling: Return HTTP 429 (Too Many Requests) with

Retry-Afterheaders (e.g., seconds until next window). - Monitoring: Log rejected requests and integrate with Prometheus/Grafana for dashboards on hit rates.

- Edge Cases: Handle clock skew (use NTP-synced servers) and test for high-concurrency (use locks or atomic ops).

- Scaling: For millions of users, shard counters by user ID hash. Add caching layers for read-heavy ops.

- Customization: Support tiers (e.g., premium users get higher L).

Testing and Edge Cases

Thorough testing is key. Use unit tests for:

- Basic allowance/rejection.

- Window boundary crossings (e.g., requests at t=59.9s and t=60.1s).

- Concurrency: Simulate 100 threads sending requests simultaneously.

- Clock manipulation: Mock time to test resets.

Common Pitfalls:

- Timezone mismatches in global apps.

- Integer overflow in large timestamps.

- Memory leaks from unpruned counters—implement eviction.

In Python, use unittest with freezegun for time mocking. In Go, leverage testing and time overrides.

Advanced Variations

- Weighted Requests: Assign costs (e.g., small request=1, large=3); increment counter by weight.

- Hierarchical Limiting: Global + per-user limits (e.g., 1000 total/site + 10/user).

- Probabilistic Limiting: For soft limits, allow overages with decreasing probability.

- Integration with ML: Dynamically adjust L based on anomaly detection.

Security Implications

Fixed Window bolsters security by mitigating DDoS, brute-force, and scraping. It forces attackers to space out attempts, increasing detection windows. Combine with CAPTCHAs or IP blacklisting for robust defense. However, it doesn’t prevent sophisticated distributed attacks—pair with WAFs like Cloudflare.

Implementation in Python and Flask

Let’s build a thread-safe, in-memory Fixed Window rate limiter in Python. For web apps, integrate with Flask:

import threading

import time

from collections import defaultdict

from flask import Flask, jsonify, request

class FixedWindowRateLimiter:

def __init__(self, limit, window_size):

self.limit = limit

self.window_size = window_size # in seconds

self.counters = defaultdict(lambda: {"window_start": 0, "count": 0})

self.lock = threading.Lock()

def is_allowed(self, user_id):

with self.lock:

current_time = int(time.time())

window_start = current_time - (current_time % self.window_size)

user_data = self.counters[user_id]

if user_data["window_start"] != window_start:

user_data["window_start"] = window_start

user_data["count"] = 0

if user_data["count"] < self.limit:

user_data["count"] += 1

return True

return False

app = Flask(__name__)

limiter = FixedWindowRateLimiter(limit=5, window_size=60)

@app.route("/api/endpoint", methods=["GET"])

def protected_endpoint():

user_id = request.headers.get("User-ID", "anonymous")

if not limiter.is_allowed(user_id):

return jsonify({"error": "Rate limit exceeded"}), 429

return jsonify({"message": "Success"})

if __name__ == "__main__":

app.run(debug=True)

Test by opening this address in your browser:

http://localhost:5000/api/endpoint

Refresh this page multiple times and you will see Rate limit message.

Implementation in Golang Gin

In Go, use Gin for web integration:

package main

import (

"net/http"

"sync"

"time"

"github.com/gin-gonic/gin"

)

type UserData struct {

WindowStart int64

Count int

}

type FixedWindowRateLimiter struct {

Limit int

WindowSize int64

Counters map[string]*UserData

Mutex sync.Mutex

}

func NewFixedWindowRateLimiter(limit int, windowSize int64) *FixedWindowRateLimiter {

return &FixedWindowRateLimiter{

Limit: limit,

WindowSize: windowSize,

Counters: make(map[string]*UserData),

}

}

func (l *FixedWindowRateLimiter) IsAllowed(userID string) bool {

l.Mutex.Lock()

defer l.Mutex.Unlock()

now := time.Now().Unix()

windowStart := now - (now % l.WindowSize)

userData, exists := l.Counters[userID]

if !exists {

userData = &UserData{WindowStart: windowStart, Count: 0}

l.Counters[userID] = userData

}

if userData.WindowStart != windowStart {

userData.WindowStart = windowStart

userData.Count = 0

}

if userData.Count < l.Limit {

userData.Count++

return true

}

return false

}

func main() {

limiter := NewFixedWindowRateLimiter(5, 60)

r := gin.Default()

r.GET("/api/endpoint", func(c *gin.Context) {

userID := c.GetHeader("User-ID")

if userID == "" {

userID = "anonmous"

}

if !limiter.IsAllowed(userID) {

c.JSON(http.StatusTooManyRequests, gin.H{"error": "Rate limit exceeded"})

return

}

c.JSON(http.StatusOK, gin.H{"message": "Success"})

})

r.Run(":8080")

}

Run the program and open this address in the browser:

http://localhost:8080/api/endpoint

Refresh a couple of times and you will see you will be rate limited after 5 consecutive tries.

Implementation in Python and FastAPI

Here is the implementation:

import time

import threading

from collections import defaultdict

from fastapi import FastAPI, Depends, HTTPException, Request, status

class FixedWindowRateLimiter:

def __init__(self, limit: int, window_size: int):

self.limit = limit

self.window_size = window_size

self.counters = defaultdict(lambda: {"window_start": 0, "count": 0})

self.lock = threading.Lock()

def is_allowed(self, user_id: str) -> bool:

with self.lock:

current_time = int(time.time())

window_start = current_time - (current_time % self.window_size)

user_data = self.counters[user_id]

print(user_data)

if user_data["window_start"] != window_start:

user_data["window_start"] = window_start

user_data["count"] = 0

if user_data["count"] < self.limit:

user_data["count"] += 1

return True

return False

limiter = FixedWindowRateLimiter(limit=5, window_size=60)

def rate_limiter(request: Request):

user_id = request.headers.get("User-ID", request.client.host or "anonymous")

if not limiter.is_allowed(user_id):

raise HTTPException(

status_code=status.HTTP_429_TOO_MANY_REQUESTS,

detail="Rate limit exceeded, Try again later.",

)

return True

app = FastAPI(title="Fixed Window Rate Limiter Example")

@app.get("/api/endpoint", dependencies=[Depends(rate_limiter)])

async def protected_endpoint():

return {"message": "Success! Request allowed"}

if __name__ == "__main__":

import uvicorn

uvicorn.run(app, host="0.0.0.0")

Run the app and open this address in the browser:

http://localhost:8000/api/endpoint

Refresh a couple of times and you will see that you are rate limited after 5 times.

FAQ: Common Questions Answered

- Q: How does it handle time zones? A: Use UTC timestamps to avoid issues.

- Q: Is it suitable for mobile apps? A: Yes, but sync client clocks or rely on server time.

- Q: What if windows are too small? A: Increases burst risk; test for your traffic.

Conclusion

The Fixed Window Algorithm remains a cornerstone of rate limiting due to its balance of simplicity and effectiveness. In an era of microservices and API-driven apps, mastering it can safeguard your systems from overload while keeping implementations lightweight.

We’ve covered the theory, use cases, and code—now it’s your turn to experiment! If you’re dealing with high-scale needs, explore enhancements like distributed caching.

What do you think? Have you used Fixed Window in a project? Share your experiences or questions in the comments below.

Leave a Reply